Hello! I am a first-year MSCS student at Stanford University in the Visual Computing track. Previously, I received my Bachelor of Applied Science in Computer Engineering from the University of Toronto. I'm interested in Computer Vision, Robotics, and Software Engineering. On the Vision and Robotics side, I aim to translate visual perception into physically plausible actions, bridging the gap between perception and planning and enabling robots to perform complex tasks that generalize well in the 3D world. On the Software Engineering side, I wish to contribute to collaboration that extends beyond individual efforts.

Email / LinkedIn / CV / Google Scholar / Misc

Stanford University, CA, United States
Master of Science in Computer Science, 2024 - 2026

University of Toronto, ON, Canada
Bachelor of Applied Science, 2019 - 2024
Major in Computer Engineering, minor in Artificial Intelligence
CGPA: 3.94/4.0

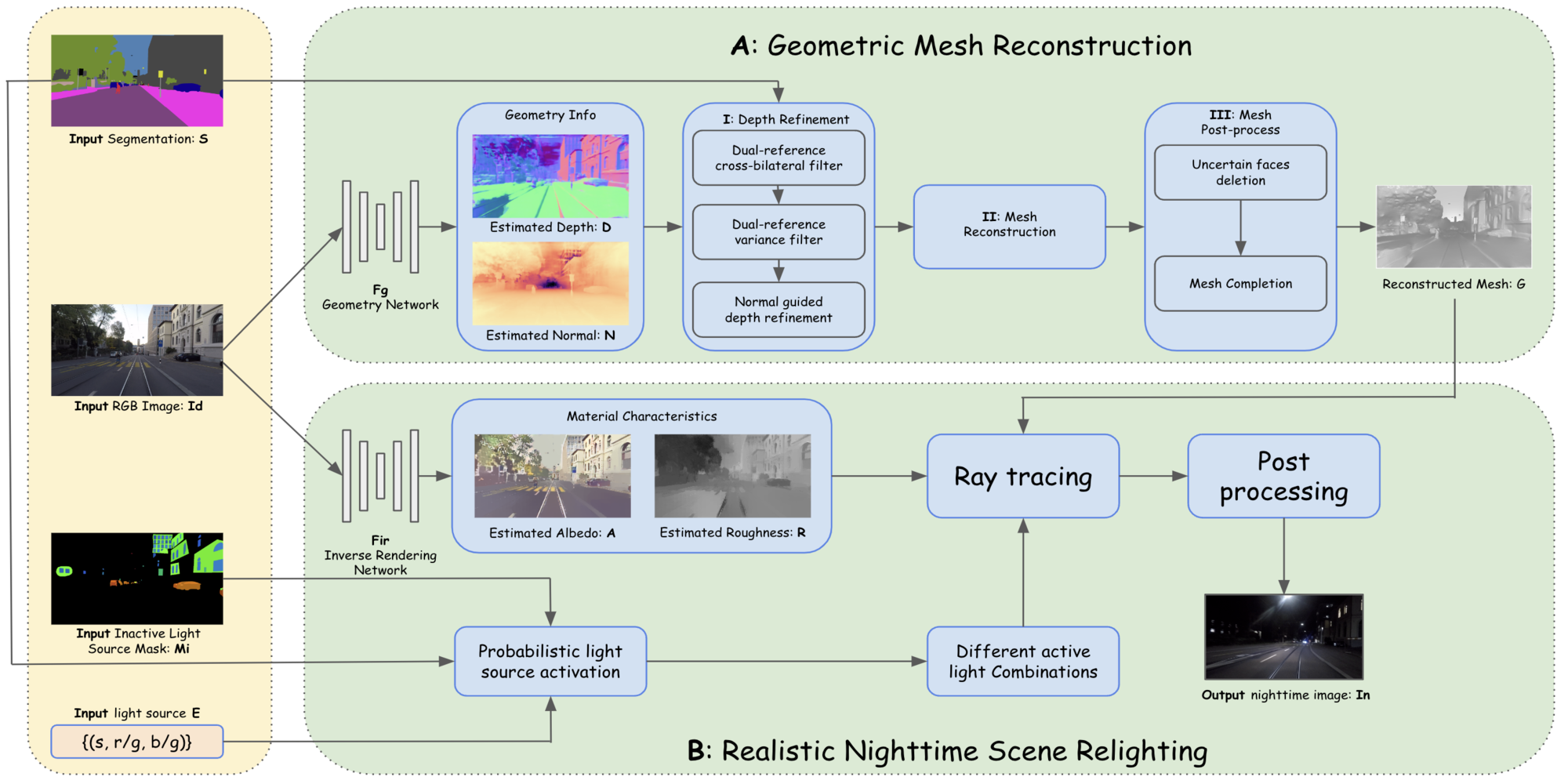

Konstantinos Tzevelekakis, Shutong Zhang, Luc Van Gool, Christos Sakaridis
Accepted by the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025
paper

Nighttime scenes are hard for learned models to perceive semantically and hard for humans to annotate. Realistic synthetic nighttime data are therefore all the more important for learning robust semantic perception at night, because their semantic annotations are accurate and cheap. However, existing data-driven or hand-crafted techniques for generating nighttime images from daytime counterparts produce images with poor realism. The cause is the complex interaction between highly spatially varying nighttime illumination, which differs drastically from its daytime counterpart, and scene objects made of spatially varying materials. This interaction happens in 3D and is very hard to capture with such 2D approaches. Unlike other conditions such as fog or rain, this 3D interaction and illumination shift have proven equally hard to *model* in the literature. Our method, named Sun Off, Lights On (SOLO), is the first to perform photorealistic nighttime simulation on single images by operating in 3D. It first explicitly estimates the scene's 3D geometry, materials, and light-source locations from the input daytime image. It then relights the scene by probabilistically instantiating light sources according to their semantics and running standard ray tracing. Our nighttime images surpass those of competing approaches, including diffusion models, in visual quality and photorealism. They also prove more beneficial for semantic nighttime segmentation in day-to-night adaptation.

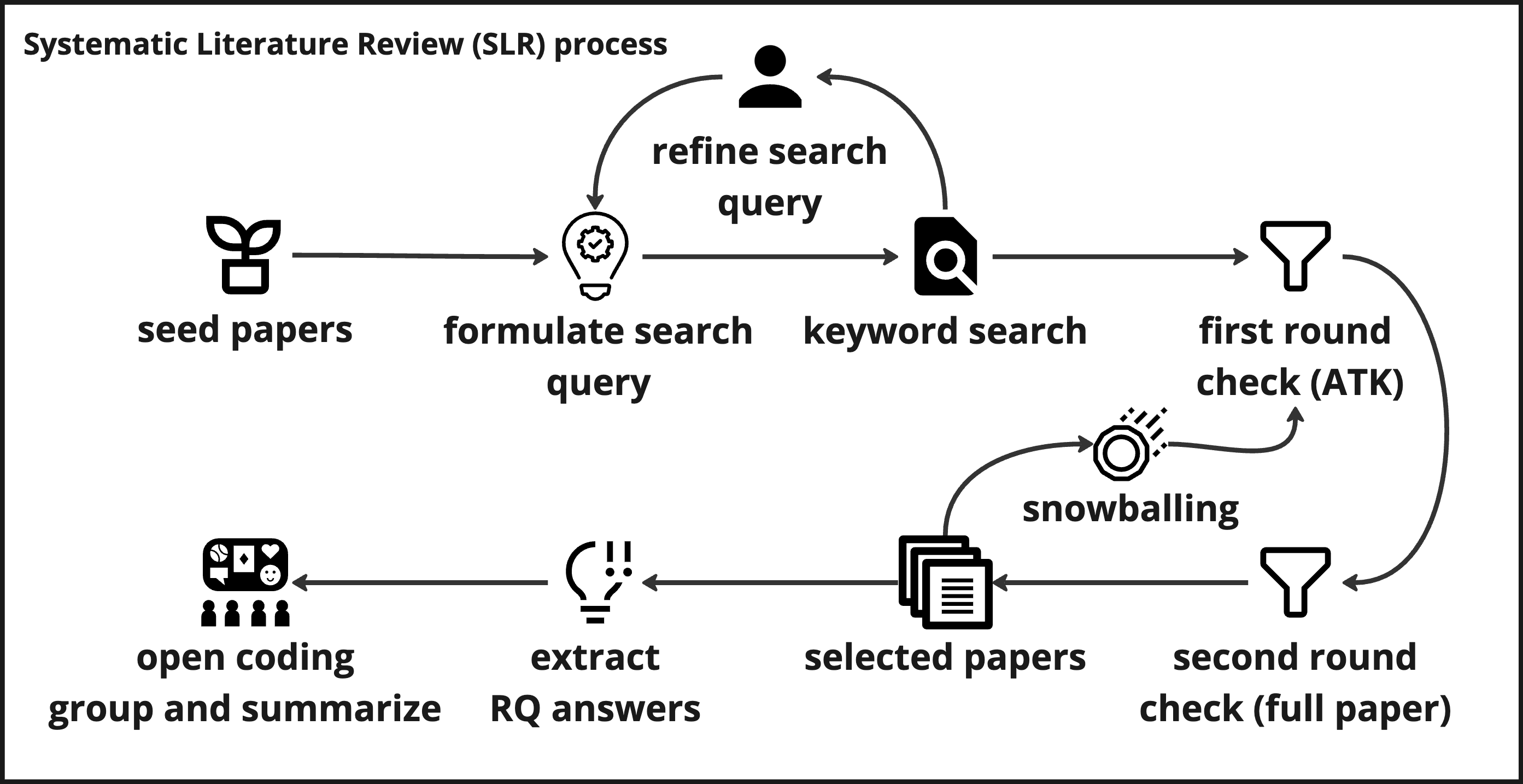

Shutong Zhang, Tianyu Zhang, Jinghui Cheng, Shurui Zhou
Accepted by the ACM Conference on Computer-Supported Cooperative Work & Social Computing (CSCW), 2025
paper (coming soon)

Software development relies on effective collaboration between Software Development Engineers (SDEs) and User eXperience Designers (UXDs) to create software products of high quality and usability. Although this collaboration has been studied over the past decades, anecdotal evidence continues to point to challenges in their joint work. To understand this gap, we first conducted a systematic literature review of 44 papers published since 2005, uncovering three key collaboration challenges and two main best practices. We then analyzed designer and developer forums, as well as discussions in open-source software repositories, to assess how these challenges and practices manifest today. Our findings apply broadly to collaboration in software development, beyond the partnership between SDEs and UXDs. The suggested best practices and interventions also serve as a reference for future research and can inform the development of dedicated collaboration tools for SDEs and UXDs.

Shutong Zhang
Thesis at ETH Zurich Computer Vision Lab
paper / slides

Semantic segmentation is an important task for autonomous driving. A robust autonomous driving system should handle images under all conditions, including nighttime. Accurate and diverse nighttime semantic segmentation datasets are therefore crucial for improving the performance of computer vision algorithms in low-light conditions. In this thesis, we introduce a novel approach named NPSim. It simulates realistic nighttime images from real daytime counterparts using monocular inverse rendering and ray tracing. NPSim comprises two key components: mesh reconstruction and relighting. The mesh reconstruction component builds an accurate representation of the scene’s structure by combining geometric information extracted from the input RGB image with semantic information from its corresponding labels. The relighting component integrates real-world nighttime light sources and material characteristics to simulate the complex interplay of light and object surfaces under low-light conditions. This thesis focuses mainly on implementing and evaluating the mesh reconstruction component. Our experiments show that this component produces high-quality scene meshes and generalizes across different autonomous driving datasets. We also propose a detailed plan for evaluating the entire pipeline. The plan covers quantitative metrics from training state-of-the-art supervised and unsupervised semantic segmentation approaches, as well as human perceptual studies. Its goal is to demonstrate that our approach generates realistic nighttime images and that our dataset can steer future progress in the field.
NPSim can help address the scarcity of nighttime datasets for semantic segmentation, and it also has the potential to improve the robustness and performance of vision algorithms under low-light conditions.

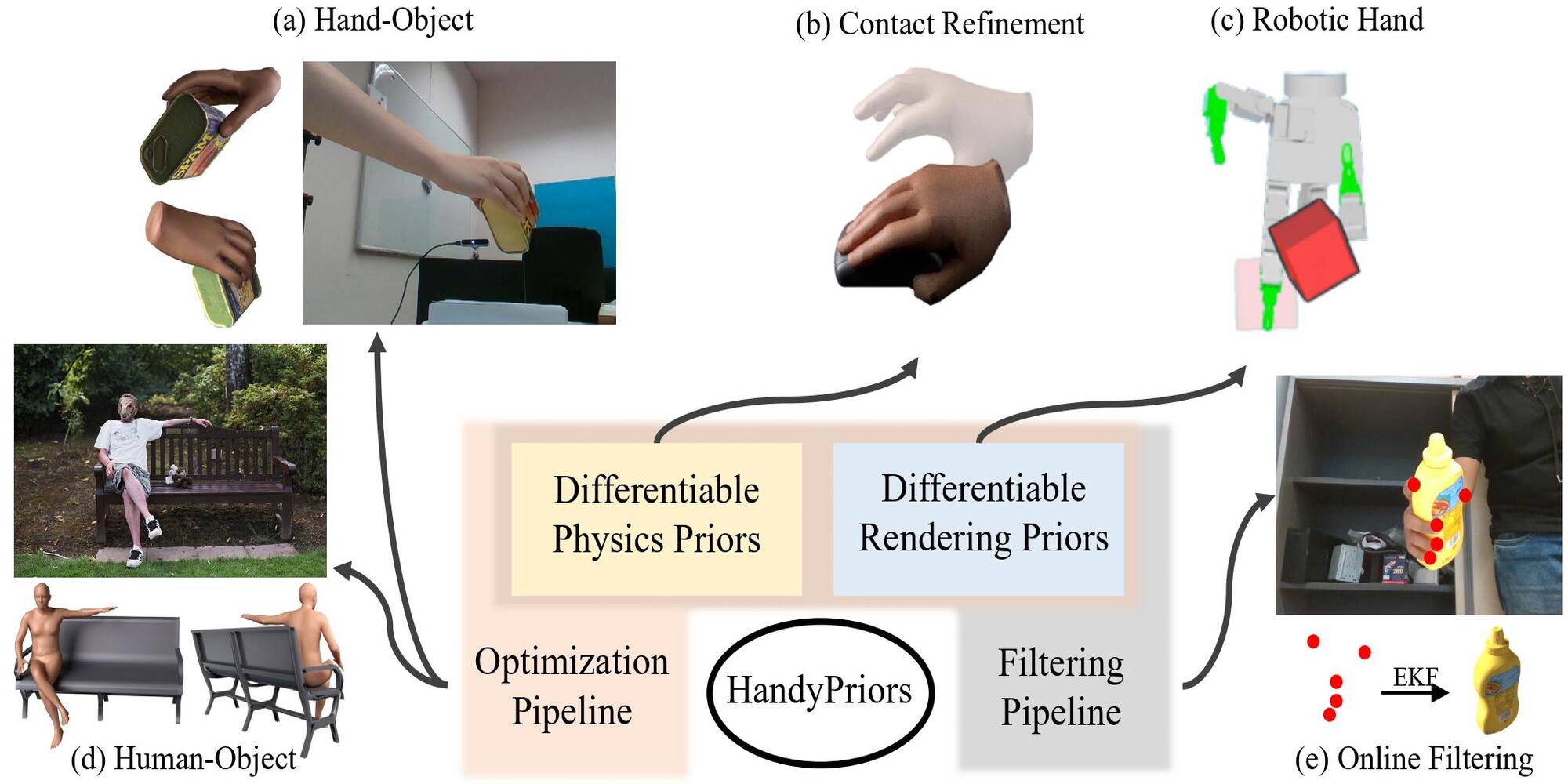

Shutong Zhang*, Yiling Qiao*, Guanglei Zhu*, Eric Heiden, Dylan Turpin, Jingzhou Liu, Ming Lin, Miles Macklin, Animesh Garg
Accepted by the Conference on Computer Vision and Pattern Recognition Workshop (CVPRW), 2023
Accepted by the IEEE International Conference on Robotics and Automation (ICRA), 2024
paper / project page

Various heuristic objectives for modeling hand-object interaction have been proposed in past work. However, because no cohesive framework unites them, these objectives often have a narrow scope of applicability and are limited in efficiency or accuracy. In this paper, we propose HandyPriors, a unified and general pipeline for human-object interaction scenes that leverages recent advances in differentiable physics and rendering. Our approach employs rendering priors to align with input images and segmentation masks. It also uses physics priors to mitigate penetration and relative sliding across frames. Furthermore, we present two alternatives for hand and object pose estimation. The optimization-based pose estimation achieves higher accuracy. The filtering-based tracking, which uses the differentiable priors as dynamics and observation models, runs faster. We demonstrate that HandyPriors attains comparable or superior results in the pose estimation task. We also show that the differentiable physics module can predict contact information for pose refinement. Finally, our approach generalizes to other perception tasks, including robotic hand manipulation and human-object pose estimation in the wild.

Dylan Turpin, Tao Zhong, Shutong Zhang, Guanglei Zhu, Eric Heiden, Miles Macklin, Stavros Tsogkas, Sven Dickinson, Animesh Garg
Accepted by the IEEE International Conference on Robotics and Automation (ICRA), 2023
paper / project page

Multi-finger grasping relies on high-quality training data, which is hard to obtain. Human data is hard to transfer, and synthetic data relies on simplifying assumptions that reduce grasp quality. We make grasp simulation differentiable and contact dynamics amenable to gradient-based optimization. This lets us accelerate the search for high-quality grasps with fewer limiting assumptions. We present Grasp’D-1M, a large-scale dataset for multi-finger robotic grasping, synthesized with Fast-Grasp’D, a novel differentiable grasping simulator. Grasp’D-1M contains one million training examples for three robotic hands (three-, four-, and five-fingered). Each example comes with multimodal visual inputs (RGB+depth+segmentation, available in mono and stereo). Grasp synthesis with Fast-Grasp’D is 10x faster than GraspIt! and 20x faster than the prior Grasp’D differentiable simulator. The generated grasps are more stable and contact-rich than GraspIt! grasps, regardless of the distance threshold used for contact generation. We validate the usefulness of our dataset by retraining an existing vision-based grasping pipeline on Grasp’D-1M. The retrained model performs dramatically better, predicting grasps with 30% more contact, a 33% higher epsilon metric, and 35% lower simulated displacement.

ETH Zurich Computer Vision Lab, Switzerland, 2023.4 - present
Research Intern
Supervisors: Prof. Luc Van Gool and Dr. Christos Sakaridis

PAIR Lab and Vector Institute, Canada, 2022.5 (project start date: 2022.8) - present
Research Intern
Supervisor: Prof. Animesh Garg, with Prof. Ming C. Lin

Forcolab, Canada, 2022.4 (project start date: 2022.5) - 2023.9
Research Intern
Supervisor: Prof. Shurui Zhou, with Prof. Jinghui Cheng

Intel Corporation, Canada, 2022.5 - 2023.4
Engineering Intern
Quality and Execution Team: Project Manager and Software Engineer
Customer Happiness and User Experience Team: Front-End Developer
Core Datapath Team: Compiler Engineer

University of Toronto, Canada, 2021.9 - 2023.4
Teaching Assistant
ECE253 Digital and Computer Systems - Fall 2021, Fall 2022
ECE243 Computer Organization - Winter 2022, Winter 2023
Supervisors: Prof. Natalie Enright Jerger, Prof. Jonathan Rose

Template borrowed from here.